Legal Insights on AI Roleplay for Adults

Understand legal aspects of AI roleplay 18+, including age verification, privacy, and ethical usage. Learn how to ensure compliance and safe interactions.

AI roleplay 18+ opens up new possibilities for creative interaction, but it also introduces complex legal challenges. For example, the rapid evolution of AI has heightened concerns about copyright infringement and intellectual property disputes. Clear guidelines are essential to ensure AI systems respect creative ownership.

Age verification is equally critical. A survey in France found that 44% of individuals aged 11–18 admitted to lying about their age online, highlighting the risks of minors accessing adult platforms. Current age estimation technologies, with an error margin of two years, further complicate this issue. These realities underscore the need for robust safeguards to protect users and ensure compliance.

Understanding AI Roleplay 18+

What Is AI Roleplay

AI roleplay 18+ refers to interactive experiences where artificial intelligence simulates characters, scenarios, or conversations tailored to adult audiences. These platforms use advanced AI models to create dynamic and engaging interactions. For example, users can engage with AI-driven characters in storytelling, problem-solving, or even therapeutic contexts. The technology adapts to user input, making each interaction unique and personalized.

A key feature of AI roleplay is its ability to provide consistency at scale. Case studies highlight how AI keeps roleplay experiences uniform across sessions while adapting to individual preferences. This adaptability lets users explore various scenarios, whether for entertainment or skill development. The result is a versatile tool that caters to diverse needs.

Why AI Roleplay Appeals to Adults

AI roleplay 18+ resonates with adults for several reasons. Many users seek companionship and emotional support, finding solace in meaningful conversations with AI. These platforms offer a non-judgmental space where users can express themselves freely. For those without access to professional therapy, AI roleplay becomes an alternative source of comfort.

The growing interest in AI roleplay is evident in its user statistics. Platforms like Character.AI attract over 28 million monthly active users, with individuals spending an average of two hours per session. Younger adults, particularly those aged 18-24, make up 43.02% of the user base, followed by 29.40% in the 25-34 age group. This trend underscores the appeal of AI roleplay as a tool for connection and exploration.

Risks and Challenges in AI Roleplay

While AI roleplay 18+ offers exciting possibilities, it also presents challenges. Privacy concerns are significant, as platforms collect and store user data to enhance interactions. Users may feel uneasy about how their information is used or shared. Additionally, the AI's behavior can sometimes deviate from user expectations, leading to frustration or discomfort.

Another challenge lies in maintaining ethical boundaries. Users have reported instances where AI failed to respect personal preferences, highlighting the need for better memory consistency. Developers must address these issues to ensure safe and positive experiences. As the technology evolves, balancing innovation with accountability will remain a critical focus.

Legal Considerations in AI Roleplay

Intellectual Property and AI

AI roleplay platforms often rely on vast datasets to train their models. These datasets frequently include copyrighted materials, such as books, artwork, and music. This practice raises significant legal questions about intellectual property. For instance, in 2023, artists Sarah Andersen, Kelly McKernan, and Karla Ortiz filed a lawsuit against Stability AI, Midjourney, and DeviantArt. They claimed their copyrighted works were used without consent to train AI models. Similarly, The New York Times sued OpenAI and Microsoft, alleging that their AI models unlawfully incorporated copyrighted articles into training datasets.

These cases highlight the risks of using copyrighted materials without permission. Courts are now debating whether such practices fall under fair use or constitute infringement. You should consider how these legal challenges might impact the future of AI roleplay. Developers must ensure their platforms comply with intellectual property laws to avoid lawsuits and maintain trust with users.

Privacy and Data Protection in AI Platforms

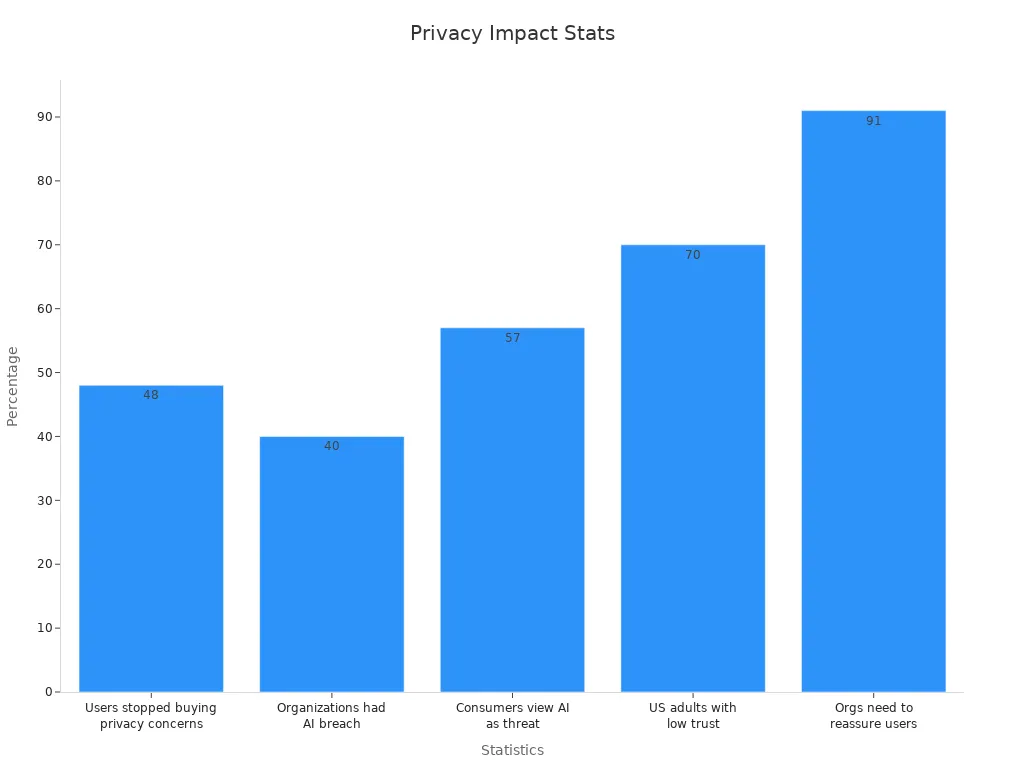

Privacy concerns are a major issue for AI roleplay platforms. These platforms collect and store user data to improve interactions, but this practice can lead to data privacy breaches. A recent study revealed that 40% of organizations using AI have experienced privacy breaches. Additionally, 57% of global consumers view AI's role in data collection as a significant threat to privacy.

When users lose trust in a platform's ability to protect their data, the consequences can be severe. Nearly 48% of users have stopped buying from companies due to privacy concerns. Developers must prioritize robust data protection measures to address these risks. Encryption, anonymization, and transparent data policies can help reassure users and meet legal requirements.

| Statistic | Percentage |
|---|---|
| Users who stopped buying from a company over privacy concerns | 48% |
| Organizations that have experienced an AI privacy breach | 40% |
| Consumers who view AI in data collection as a threat to privacy | 57% |
| US adults with little to no trust in companies using AI responsibly | 70% |
| Organizations needing to reassure customers about data use with AI | 91% |

Liability for Developers and Users

The potential to cause real-world harm through AI roleplay platforms raises questions about liability. If an AI system generates harmful or misleading content, who should be held accountable? Developers bear significant responsibility for ensuring their platforms operate safely and ethically. However, users also play a role in using these tools responsibly.

For example, if a user manipulates an AI system to produce harmful content, they could face legal consequences. Developers must implement safeguards to prevent misuse, such as content moderation and user guidelines. At the same time, you should understand the risks involved in using these platforms and adhere to ethical practices. Balancing innovation with accountability is essential to minimize harm and build trust in AI technologies.

Regional Laws Governing AI Roleplay 18+

Understanding regional laws is essential when engaging with AI roleplay platforms. Different countries have unique regulations that govern how AI systems operate, especially when they involve age restrictions and adult content. You must familiarize yourself with these laws to ensure compliance and avoid legal complications.

United States

In the United States, AI roleplay platforms must adhere to federal and state laws. The Children’s Online Privacy Protection Act (COPPA) restricts the collection of personal information from children under 13 and requires verifiable parental consent before such data is gathered. COPPA does not itself regulate access to adult content; age-gating obligations for adult material come mainly from state laws, which vary. Violating COPPA can result in hefty fines. The Federal Trade Commission (FTC) enforces COPPA and also oversees AI-related privacy concerns. You should check that platforms follow FTC guidance to safeguard user data.

The California Consumer Privacy Act (CCPA) adds another layer of regulation. If you are a California resident, you have the right to know how your data is collected and used. Covered businesses must provide transparency and let users opt out of the sale or sharing of their personal information. Together, these laws emphasize the importance of privacy and age-appropriate safeguards in AI roleplay.

European Union

The European Union enforces strict regulations through the General Data Protection Regulation (GDPR). GDPR requires platforms to have a lawful basis, such as consent, before processing personal data, and explicit consent for especially sensitive categories of data. If you interact with AI systems in the EU, you can request access to your data and, in many cases, demand its deletion. Where a service relies on consent, GDPR also requires reasonable efforts to verify parental consent for children below the digital age of consent, which pushes platforms toward robust age checks.

The EU’s Artificial Intelligence Act is another critical regulation. It categorizes AI systems by risk level, imposes the strictest requirements on high-risk applications, and sets transparency obligations for chatbots, such as telling users that they are interacting with an AI. AI roleplay platforms must meet the standards that apply to them to operate legally. You should check whether the platform you use complies with these laws to avoid potential issues.

Asia-Pacific

Countries in the Asia-Pacific region have diverse approaches to regulating AI. In Japan, the Act on the Protection of Personal Information (APPI) governs data privacy. If you use AI roleplay platforms in Japan, you can expect strict rules on data handling and transparency. Developers must ensure their systems align with APPI requirements.

China has introduced comprehensive AI regulations, including the Personal Information Protection Law (PIPL). These laws emphasize user consent and data security. Platforms must also implement age restrictions to prevent minors from accessing adult content. You should verify whether the platform complies with these laws before engaging with AI roleplay.

Other Regions

Other regions, such as Canada and Australia, have their own rules that affect AI platforms. Canada’s Personal Information Protection and Electronic Documents Act (PIPEDA) focuses on data privacy and user consent. In Australia, the Privacy Act requires platforms to protect user data, while duties to restrict minors' access to adult content arise under separate online safety rules. If you use AI roleplay tools in these countries, you should check that the platform adheres to local laws.

Tip: Always review the terms and conditions of AI platforms to understand how they comply with regional laws. This step can help you avoid legal risks and ensure a safe experience.

Age Verification for AI Roleplay Platforms

Importance of Age Verification

Age verification plays a crucial role in ensuring the safety of users on AI roleplay platforms. These systems prevent minors from accessing adult content and support compliance with child-protection and privacy laws such as COPPA in the United States and GDPR in the European Union. Without proper verification, platforms risk exposing underage users to inappropriate material, leading to legal penalties and reputational damage.

The consent rules in these laws show why knowing a user's age matters. COPPA requires verifiable parental consent before collecting personal information from users under 13, while GDPR requires parental consent for users under 16 when processing relies on consent (member states may lower this age to as low as 13). The table below summarizes these requirements:

| Jurisdiction | Age Threshold | Consent Requirement |
|---|---|---|
| COPPA (US) | 13 | Parental consent required for under 13 |
| GDPR (EU) | 16 (member states may lower to 13) | Parental consent required below the applicable threshold |
| UK GDPR | 13 | Parental consent required for under 13 |

By implementing robust age verification systems, you can ensure compliance with these regulations and protect vulnerable users. This step also builds trust among users, reinforcing the platform's commitment to safety.
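The thresholds in the table above can be captured in a small lookup. The following is a minimal sketch, not a legal compliance tool: the dictionary keys and the `needs_parental_consent` function are hypothetical names chosen for illustration, and an 18+ platform would apply its own adult-only gate on top of these consent ages.

```python
# Consent ages copied from the table above. The keys and the function name
# are illustrative assumptions, not part of any statute or library.
CONSENT_AGE = {
    "US_COPPA": 13,
    "EU_GDPR_DEFAULT": 16,  # individual member states may set 13, 14, or 15
    "UK_GDPR": 13,
}


def needs_parental_consent(age: int, jurisdiction: str) -> bool:
    """Return True when the user's age is below the consent age for the jurisdiction."""
    if jurisdiction not in CONSENT_AGE:
        raise ValueError(f"unknown jurisdiction: {jurisdiction}")
    return age < CONSENT_AGE[jurisdiction]


if __name__ == "__main__":
    for code in CONSENT_AGE:
        print(code, needs_parental_consent(15, code))
```

Keeping the thresholds in one table-like structure makes it easier to update them when a jurisdiction changes its rules.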

Effective Age Verification Methods

AI platforms use various methods to verify users' ages, each with its own strengths and limitations. Some of the most effective approaches include:

- Matching government-issued ID documents with biometric data. This method provides a high level of certainty for legal compliance.

- AI-based facial age estimation. This approach is faster and more efficient but introduces a margin of error compared to document-based systems.

- Combining multiple verification methods. Research shows that hybrid systems, which integrate document verification and AI-based estimation, offer better accuracy and reliability.

Recent studies and real-world implementations demonstrate that document-based systems outperform facial age estimation in terms of effectiveness. While AI-based methods are gaining traction, their error margins make them less reliable for platforms requiring strict compliance.
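One way to picture a hybrid system is as a triage step: the facial estimate settles only the clear cases, and anyone near the threshold is sent to document verification. The sketch below assumes the two-year error margin mentioned earlier in this article; the names `Decision` and `triage_by_estimated_age` are hypothetical, and a real deployment would tune the margin to its own estimator and legal obligations.

```python
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    DENY = "deny"
    REQUIRE_DOCUMENT = "require_document"


ADULT_AGE = 18
ERROR_MARGIN_YEARS = 2.0  # assumption: the two-year margin cited in this article


def triage_by_estimated_age(
    estimated_age: float, margin: float = ERROR_MARGIN_YEARS
) -> Decision:
    """Route a user based on a facial age estimate and its error margin."""
    # Even the lowest plausible age is adult: no document needed.
    if estimated_age - margin >= ADULT_AGE:
        return Decision.ALLOW
    # Even the highest plausible age is under 18: reject.
    if estimated_age + margin < ADULT_AGE:
        return Decision.DENY
    # The estimate is too close to the threshold: fall back to an ID check.
    return Decision.REQUIRE_DOCUMENT


if __name__ == "__main__":
    for age in (14, 17, 19, 25):
        print(age, triage_by_estimated_age(age).value)
```

The design choice here is that the less reliable method never makes the borderline call on its own; it only reduces how many users need the slower document check.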

Challenges in Verifying Age

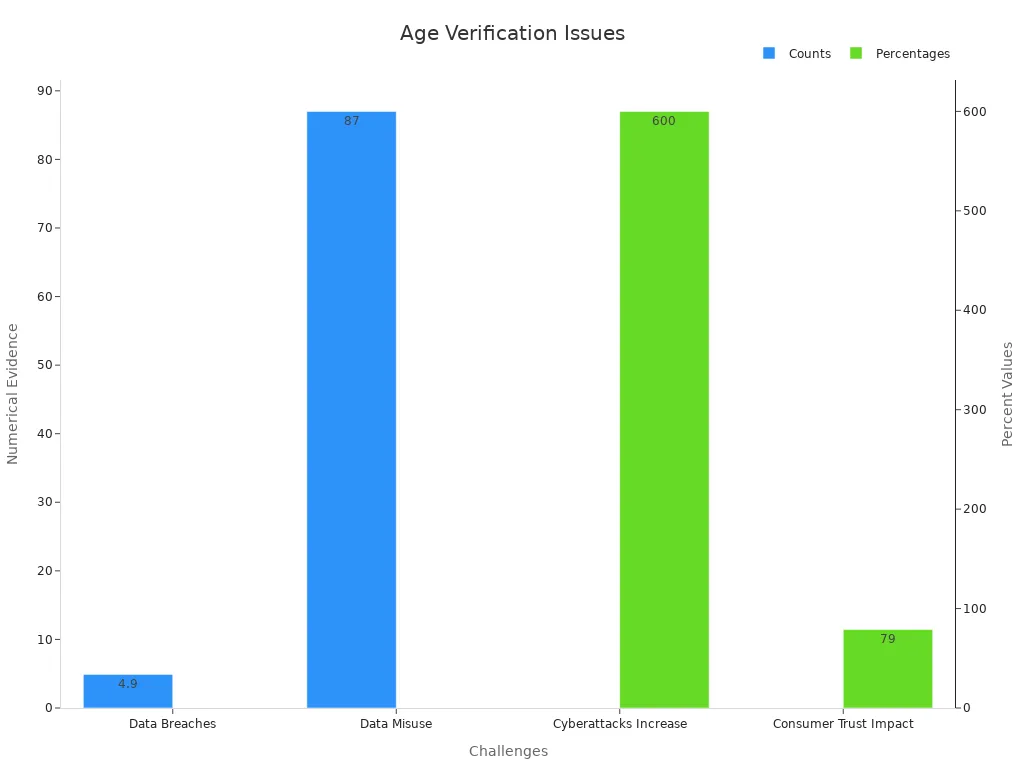

Despite the importance of age verification, implementing these systems comes with challenges. Data breaches, cyberattacks, and misuse of personal information are significant concerns. For example, DoorDash experienced a data breach in 2019 that affected 4.9 million users. Similarly, cyberattacks surged by 600% during the COVID-19 pandemic, exposing vulnerabilities in digital systems.

The table below outlines key challenges supported by statistical evidence:

| Challenge | Evidence |
|---|---|
| Data Breaches | DoorDash experienced a data breach in 2019 affecting 4.9 million users. |
| Cyberattacks Increase | Cyberattacks surged by 600% during the COVID-19 pandemic. |
| Data Misuse | The Facebook-Cambridge Analytica scandal impacted 87 million users due to data diversion. |
| Consumer Trust Impact | 79% of consumers would reconsider their brand loyalty upon discovering irresponsible data usage. |

These challenges highlight the need for robust security measures. Encryption, anonymization, and transparent data policies can mitigate risks and reassure users. By addressing these issues, you can enhance the reliability of age verification systems and maintain user trust.
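As a small illustration of the anonymization idea, a platform might replace raw user identifiers with keyed hashes before storing verification logs. This is a minimal sketch using only Python's standard library; the `pseudonymize` function name is hypothetical. Strictly speaking it shows pseudonymization rather than full anonymization, since anyone holding the key can recompute the mapping, so the key must be stored separately and protected.

```python
import hashlib
import hmac
import secrets


def pseudonymize(user_id: str, key: bytes) -> str:
    """Return a keyed SHA-256 digest (hex) that stands in for the raw identifier."""
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


if __name__ == "__main__":
    # Assumption: in practice the key would come from a secrets manager,
    # not be generated fresh on every run.
    key = secrets.token_bytes(32)
    print(pseudonymize("user-12345", key))
```

Using a keyed hash instead of a plain hash means an attacker who obtains the logs cannot simply hash guessed identifiers to reverse them without also obtaining the key.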

Emerging Technologies for Age Verification

Emerging technologies are transforming how platforms verify user ages, making the process more accurate and secure. These advancements address common challenges like bias, privacy concerns, and reliability issues. By adopting these innovations, you can ensure compliance with legal requirements while protecting user data.

- AI-Driven Solutions. AI plays a pivotal role in modern age verification systems. Advanced algorithms analyze user data to estimate age with increasing precision. Well-designed systems aim to reduce bias, supporting fair access for users across different demographics. For example, AI can adapt to diverse facial features, improving accuracy for individuals from various ethnic backgrounds. This inclusivity strengthens trust in the verification process.

- Diverse Data Integration. Incorporating diverse datasets into AI models enhances their reliability. When systems train on a wide range of data, they perform better in real-world scenarios. This approach reduces errors and makes results more consistent. For instance, platforms that use global datasets can verify ages more accurately across cultural and regional differences. You benefit from a system that works well for everyone.

- Privacy-Enhancing Technologies. Privacy remains a top concern in age verification. Technologies like federated learning and zero-knowledge proofs address this issue. Federated learning allows AI models to learn from user data without storing it on central servers. This method protects sensitive information while maintaining system performance. Zero-knowledge proofs enable platforms to confirm your age without revealing personal details. These innovations support compliance with privacy laws and build user confidence.

Tip: Look for platforms that prioritize privacy by using these advanced technologies. They offer a safer and more secure experience.

As these technologies evolve, they will continue to improve the accuracy and security of age verification systems. By staying informed about these advancements, you can make better decisions when engaging with AI platforms.

Responsible Usage of AI Roleplay Tools

Ethical Guidelines for AI Roleplay

Practicing responsible use of AI roleplay tools begins with adhering to ethical guidelines and best practices. Developers, users, and regulators must engage in ongoing dialogue to identify emerging threats and create solutions. Staying updated on AI ethics guidelines from organizations like OpenAI ensures you remain informed about responsible use.

Respecting user privacy is essential. Avoid applications that could lead to harm, such as deepfakes, cyberbullying, and hate speech. Monitor AI outputs to detect bias or harmful content, and refine the tools continuously to reduce those risks. You should also consider the potential impact of your prompts, ensuring they remain ethical and respectful.

Avoiding Harmful Interactions

AI roleplay tools can unintentionally cause harm if not used responsibly. Deepfakes, for instance, can spread misinformation and disinformation. These tools may also perpetuate bias, which can result in unfair or harmful outcomes.

To avoid harm, you should follow clear guidelines. Be mindful of how prompts influence AI responses. Avoid using the tools to create content that promotes hate or cyber-bullying. Developers must implement safeguards to prevent misuse, while users should practice responsible use by adhering to ethical standards.

Promoting Safe and Positive Engagement

Promoting online safety is a shared responsibility. AI roleplay platforms should foster environments that encourage positive interactions. Developers can achieve this by designing systems that prioritize ethical guidelines and best practices. Users, on the other hand, can contribute by reporting harmful content and practicing responsible use.

Encouraging inclusivity and fairness reduces bias and ensures a better experience for all users. Platforms should also focus on ensuring data privacy to build trust. By following these principles, you can help create a safer and more enjoyable space for everyone.

Balancing Innovation with Accountability

Balancing innovation with accountability in AI roleplay requires a thoughtful approach. As AI continues to evolve, you must consider how to foster creativity while ensuring ethical and responsible use. This balance is essential to prevent harm and build trust in the technology.

One way to achieve this balance is by understanding the dual-use nature of AI. While AI can drive innovation in areas like therapeutic discovery, it also poses risks if misused. Over-regulation may stifle progress, but a lack of safeguards can lead to unintended consequences. Policies must remain flexible to adapt to new technologies while addressing emerging threats. The table below highlights key aspects of balancing innovation and accountability:

| Aspect | Description |
|---|---|
| Innovation vs. Safeguards | The dual-use nature of AI in biotechnology requires a balance between fostering innovation and implementing safeguards. |
| Risks of Over-regulation | Over-regulation can stifle progress in therapeutic discovery and synthetic biology. |
| Need for Flexibility | Policies must be adaptable to evolving technologies while robust enough to address emerging threats. |
| Interdisciplinary Approach | Engagement between AI researchers, bioengineers, and security experts is essential for understanding dual-use risks. |

To ensure AI serves humanity, you should prioritize ethical development. Responsibility and ownership are crucial for developers and users alike. Transparency in how AI systems operate fosters equitable use and builds public confidence. These principles create a foundation for innovation that benefits society.

- Ethical AI ensures innovation serves humanity.

- Responsibility and ownership are crucial in AI development.

- Transparency is necessary for equitable AI use.

By embracing these practices, you can help create a future where AI roleplay thrives without compromising accountability. This approach not only supports technological progress but also safeguards the well-being of users and society.

Understanding the legal and ethical landscape of AI roleplay ensures a safer and more enjoyable experience. You should prioritize compliance with regulations, adopt robust age verification methods, and use these tools responsibly. Staying informed about advancements in AI technologies and laws helps you navigate this evolving space confidently.

To improve safety and compliance, consider these steps:

- Establish clear governance policies to define roles and responsibilities.

- Conduct regular risk assessments to identify potential non-compliance.

- Implement strong data governance practices to ensure data legality.

- Maintain transparency in AI systems to build trust.

- Strengthen cybersecurity measures to protect platforms from breaches.

- Monitor systems continuously and prepare incident response plans.

By following these practices, you can engage with AI roleplay tools ethically and securely. This approach not only protects you but also fosters trust in the technology's potential to enhance creativity and connection.

Tip: Always review platform policies and stay updated on AI regulations to ensure a safe and compliant experience.

FAQ

What legal risks should you consider when using AI roleplay platforms?

AI roleplay platforms may involve copyright infringement, privacy violations, or misuse of data. You should review the platform’s terms and conditions and ensure compliance with regional laws. Developers must also implement safeguards to prevent harmful or illegal content generation.

How can you verify the safety of your data on AI roleplay platforms?

Check if the platform uses encryption and anonymization to protect your data. Look for transparency in data policies and compliance with privacy laws like GDPR or CCPA. Platforms that prioritize user privacy build trust and reduce risks.

Are minors completely restricted from accessing AI roleplay 18+ platforms?

Minors are restricted by law, but enforcement depends on the platform’s age verification system. Robust methods like ID verification or AI-based facial estimation help prevent underage access. You should choose platforms that comply with legal requirements for age restrictions.

What should you do if an AI roleplay platform generates harmful content?

Report harmful content immediately to the platform’s support team. Developers must implement moderation tools to prevent misuse. You should also avoid prompts that could lead to unethical or harmful outputs.

Can AI roleplay platforms be used for therapeutic purposes?

Yes, AI roleplay platforms can provide emotional support and simulate therapeutic scenarios. However, they cannot replace professional therapy. You should use them as supplementary tools while seeking guidance from licensed therapists for serious concerns.
