How Society Is Responding to AI-Generated Undressing Apps

AI-generated "undressed" pornography raises serious privacy and ethical concerns. Learn how society is responding in 2025 with legal reforms, advocacy, and protections against misuse.

The rise of AI-generated "undressed" pornography has sparked intense debate about privacy and ethics. Undressing apps use AI to create invasive sexual images of real people, usually without their consent. High-profile data breaches and unauthorized uses of personal information show how exposed your privacy becomes when AI technologies are misused. Without proper safeguards, these tools can lead to severe violations, including the misuse of biometric data such as facial images. Addressing these issues is urgent because they raise hard ethical questions, such as how to balance personal freedom with security. You should remain vigilant as society works through these challenges.

Public Awareness and Advocacy

Growing Concerns About Undress AI Apps

The rapid rise of undress AI apps has sparked widespread concern. These tools misuse AI to create non-consensual intimate imagery (NCII), targeting celebrities and private individuals alike, often without their knowledge. This exploitation highlights the urgent need for public awareness and stronger legal frameworks. In 2023, one analysis reported that links advertising "deepnude"-style undressing services on social media platforms rose by more than 2,000%, and that undressing websites drew nearly 24 million unique visitors in a single month. These figures reflect not only public curiosity but also the alarming scale of misuse. Discussions about privacy and the societal impact of these apps have intensified, emphasizing the need for immediate action.

Advocacy for Privacy Protections

Advocacy groups and privacy experts are working to address the challenges posed by undress AI apps. They emphasize adopting Privacy by Design (PbD) principles, which build data protection into systems from the start rather than adding it afterward. Surveys show that stakeholders' attitudes and practices significantly influence whether these initiatives succeed. Sharing success stories has proven effective in fostering a culture of privacy awareness. By documenting and disseminating these narratives, advocates aim to inspire individuals and organizations to prioritize privacy protections. These efforts highlight the critical role of education and collaboration in combating the misuse of AI technologies.

Societal Reactions to AI Misuse

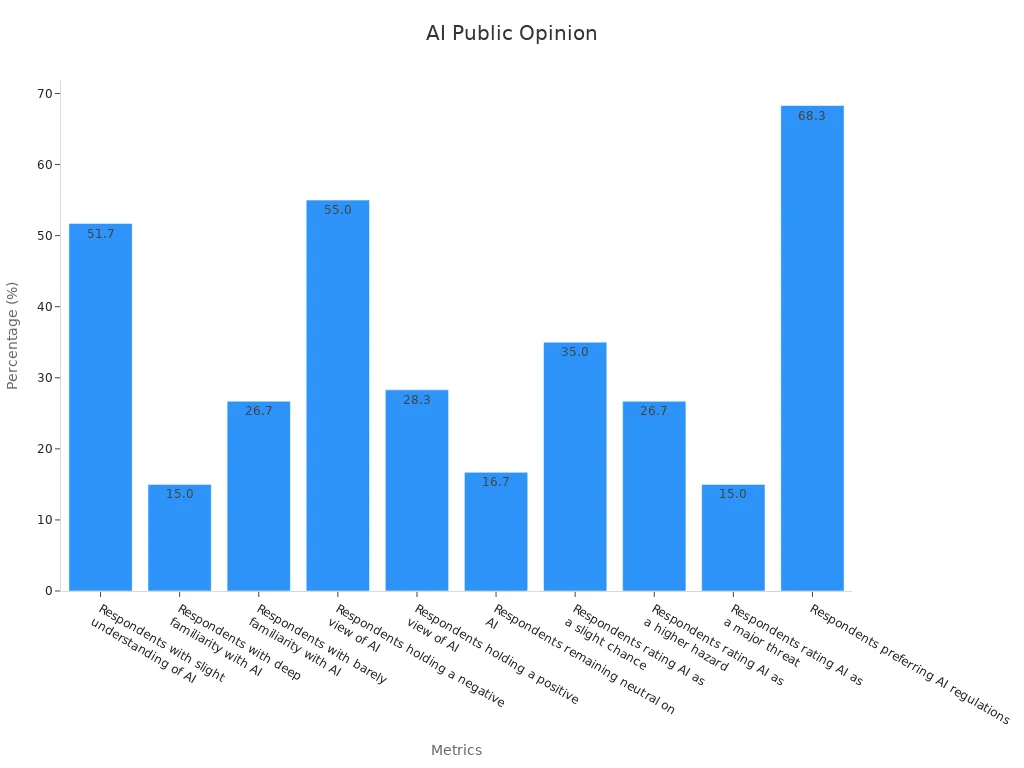

Public opinion on AI misuse reveals both concern and a demand for regulation. A recent survey found that 55% of respondents hold a negative view of AI, while 68.3% support stricter regulation. The chart above illustrates these trends.

These statistics underscore the growing awareness of the risks associated with undress AI apps. As more people recognize the potential harm, public pressure on governments and tech companies to act continues to grow. This collective response demonstrates the power of public advocacy in shaping policies and promoting ethical AI use.

Legal Challenges and Ethical Dilemmas

Privacy Violations and Legal Gaps

AI-generated tools have exposed significant gaps in privacy protections. These tools often operate in a legal gray area, leaving your privacy rights vulnerable. Several challenges make it difficult to regulate AI effectively:

- Algorithmic opacity prevents you from understanding how your data is used or altered.

- The lack of a federal privacy framework in the U.S. results in inconsistent state laws, complicating enforcement.

- Data repurposing allows companies to use your information beyond its original purpose without your consent.

- Data spillovers mean AI can collect information about you even if you didn’t agree to it.

- Digital data persistence makes it hard to delete sensitive information, leaving it exposed for years.

These issues highlight the urgent need for comprehensive legal protections to address the misuse of AI technologies and safeguard your privacy and dignity.

Ethical Issues in AI-Generated Content

AI-generated content raises serious ethical concerns, especially when it involves non-consensual content such as fake nude images. A 2021 survey found that 72% of consumers value companies that ask for consent before using their data, which shows how much transparency and ethics matter in building trust. Scandals like Facebook-Cambridge Analytica show the damage privacy violations cause: trust in Facebook reportedly fell 8% after the incident. By contrast, companies with clear AI policies saw a 15% increase in consumer trust in 2022. These findings underline the need for ethical AI practices that prevent online harassment and protect your privacy rights.

Legislative Efforts to Combat Undressed AI Porn

Governments are taking steps to combat the non-consensual spread of intimate images created by AI. New York passed a law in 2019 making it illegal to share intimate images without consent. California's SB 981 requires social media platforms to provide tools for reporting sexually explicit deepfakes. Senator Ted Cruz proposed the Take It Down Act, which requires platforms to remove non-consensual intimate images within 48 hours of a victim's request. Massachusetts introduced a revenge porn bill that criminalizes AI-generated pornography, with penalties of up to 10 years in prison. The 2022 reauthorization of the Violence Against Women Act created a federal civil remedy that lets victims of non-consensual intimate image disclosure seek damages, a remedy that may also reach AI-generated abuse. These legislative efforts aim to protect women and others from sextortion and the harm caused by AI-generated abuse.

Technology Companies and Social Media Platforms

Responsibilities of AI Developers

AI developers play a critical role in addressing the ethical and privacy challenges posed by undress AI apps. You should expect developers to implement robust privacy protocols, such as adhering to regulations like the General Data Protection Regulation (GDPR) or the California Consumer Privacy Act (CCPA). These measures protect your personal data from unauthorized access. Developers must also prioritize data encryption and secure storage to prevent breaches.

Informed consent is another essential responsibility. AI systems should operate transparently, ensuring you understand how your data is used and giving you control over it. Regular audits of AI systems help identify and resolve ethical issues, such as bias or transparency problems. Companies like Google and Microsoft have set examples by emphasizing fairness and accountability in their AI ethics principles. Google focuses on privacy and transparency, while Microsoft works to mitigate bias and improve explainability. These efforts demonstrate how developers can align AI technologies with ethical standards to minimize harm.

Social Media Policies on AI-Generated Content

Social media platforms significantly influence how AI-generated content is regulated. Platforms like Facebook have introduced transparency initiatives, such as the "Why am I seeing this ad?" feature, to help you understand how algorithms work. These companies also invest in diverse research teams to evaluate the societal impact of AI products. By incorporating local expertise, they address biases and ensure AI technologies serve a global audience effectively.

However, many platforms still struggle to enforce policies against harmful AI-generated content. Reporting tools for non-consensual imagery are often slow or hard to use, leaving victims vulnerable. Stronger policies and better enforcement mechanisms are needed to protect users and uphold ethical standards.

Challenges in Regulating Undress AI Apps

Regulating undress AI apps presents unique challenges. Studies such as Buolamwini and Gebru (2018) reveal significant disparities in the accuracy of commercial facial-analysis systems across gender and skin tone. These inaccuracies can lead to discriminatory outcomes, particularly for marginalized groups. The erosion of personal autonomy in algorithmic decision-making further complicates regulation: you may feel a false sense of control when interacting with AI systems, which can result in ethical violations.

The persistence of digital data also makes it difficult to remove harmful content once it spreads. Governments and tech companies must collaborate to create comprehensive frameworks that address these issues. Without such efforts, the harmful impact of undress AI apps will continue to grow, disproportionately affecting women and other vulnerable groups.

Protecting Privacy and Combating Misuse

Individual Strategies for Privacy Protection

You can take several steps to protect your privacy and maintain online safety in the face of AI misuse. Start by limiting the amount of personal information you share on social media platforms. Adjust your privacy settings to restrict access to your photos and posts. Using tools like InfoTracer can also help detect and prevent the misuse of your data.

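InfoTracer reportedly detects data misuse with statistically significant results (p-values below 0.05), even when only limited data is available. A p-value below 0.05 means the detections are unlikely to be due to chance alone. It does not guarantee that every instance of misuse will be caught, so such tools work best alongside the precautions above.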

Depending on where you live, you may also have the right to opt out of certain disclosures of your personal information. Financial institutions, for example, must disclose their privacy policies so that you stay informed about how your data is used. These safeguards help you take control of your online presence and reduce the risk of your images being exploited.

Educating Parents and Communities

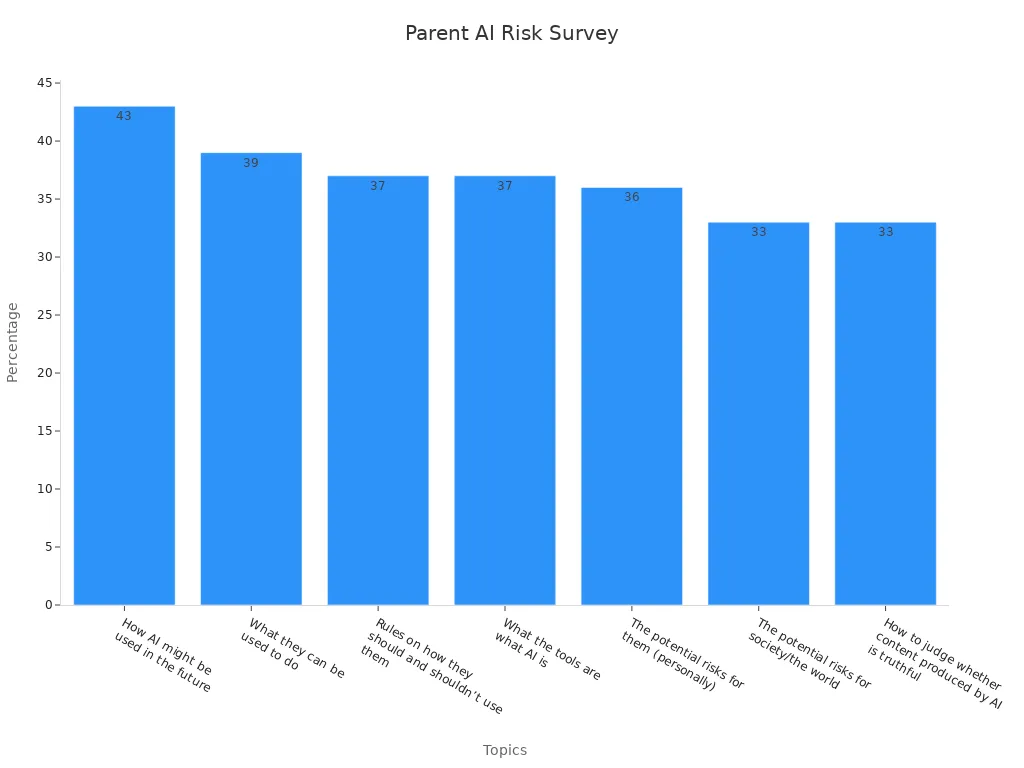

Educating parents and communities about AI misuse is essential for promoting online safety. Many parents remain unaware of the risks posed by AI-generated content, including fake nude images. A recent survey highlights the topics parents discuss with their children regarding AI:

| Topic of conversation | Percentage of parents (%) |
|---|---|
| How AI might be used in the future | 43% |
| What they can be used to do | 39% |
| Rules on how they should and shouldn't use them | 37% |
| What the tools are/what AI is | 37% |
| The potential risks for them (personally) | 36% |
| The potential risks for society/the world | 33% |
| How to judge whether content produced by AI is truthful | 33% |

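Only about a third of parents discuss the personal (36%) or societal (33%) risks of AI with their children, and just 33% talk about how to judge whether AI-generated content is truthful. These figures point to a need for greater awareness of AI's impact on online safety. By fostering open conversations, you can help your community understand the risks and adopt better practices that protect everyone's dignity and privacy.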

Organizational Efforts to Address AI Misuse

Organizations play a vital role in combating the misuse of AI technologies. Many companies now implement robust risk-management frameworks to address AI-generated image abuse. These frameworks enhance trust among employees and stakeholders, ensuring ethical practices are followed.

- Robust risk-management frameworks prevent AI misuse and build trust.

- Stakeholder engagement supports AI-driven strategies for sustainability and ethics.

Studies like Heilinger et al. (2024) emphasize the importance of collaboration in addressing AI misuse. By working together, organizations can create safeguards that protect vulnerable groups, including women, from the harmful effects of fake nude images. These efforts highlight the collective responsibility of businesses to uphold ethics and promote online safety.

Society has taken significant steps to address the impact of AI-generated undressing apps. Public awareness campaigns, legal reforms, and corporate actions have all contributed to combating this misuse, but governments, companies, and communities must keep collaborating to protect privacy and uphold ethics. Public-private initiatives, such as task forces involving law enforcement and tech companies, can strengthen data protection. These initiatives address immediate concerns and also drive discussion of legislative change. Continued vigilance and proactive measures are essential as AI technologies and the challenges they pose evolve.

FAQ

What are undress AI apps, and why are they harmful?

Undress AI apps use artificial intelligence to create fake nude images of individuals without their consent. These apps violate privacy and dignity, often leading to emotional distress and reputational damage. Their misuse highlights the need for stronger privacy protections and ethical AI development.

How can you report non-consensual AI-generated content?

You can report such content through social media platforms' reporting tools or contact local law enforcement. Some platforms provide specific options for reporting fake intimate images. Always document the evidence before reporting to strengthen your case.

Are there laws against AI-generated undressing apps?

Yes, some regions have laws addressing this issue. For example, New York's 2019 law criminalizes sharing intimate images without consent, and California's SB 981 requires social media platforms to provide tools for reporting sexually explicit deepfakes. However, legal gaps remain, and advocacy for comprehensive legislation continues.

How can you protect your photos from being misused by AI?

Limit the personal information and photos you share online. Adjust privacy settings on social media to restrict access. Use tools like reverse image search to monitor misuse. Staying vigilant about your digital footprint helps reduce risks.

What role do technology companies play in combating AI misuse?

Technology companies must implement ethical AI practices, such as transparency and informed consent. They should also develop robust reporting tools and collaborate with governments to regulate harmful AI applications. Their proactive efforts can significantly reduce misuse.
