AI Roleplay and Sexuality Are Changing the Rules of Intimacy

AI sexual roleplay technology is reshaping intimacy, raising ethical, privacy, and mental health questions while offering new paths for personal growth.

AI sexual roleplay technologies are rapidly reshaping intimacy and challenging traditional relationships. Imagine a world where artificial intimacy feels as real as human touch. Advanced AI companions recall conversations, simulate long-term bonds, and even predict emotional needs. This shift blurs the line between simulated and real connection, raising new questions about ethics and mental health. Researchers note that the worse a person's human relationships are, the more likely that person is to form an addictive bond with AI, and that teens are especially at risk.

Mental health concerns rise as users seek fulfillment from AI, often replacing real connections.

AI Sexual Roleplay and New Intimacy

Human-AI Connections

AI sexual roleplay technologies have transformed how people form connections. Many users now turn to chatbots for companionship, support, and even romance. These AI-powered chatbots remember past conversations, respond with empathy, and create a sense of artificial intimacy that feels real to many. People often engage in role-play with these chatbots, exploring scenarios that might feel too risky or taboo in real life. This shift has changed the landscape of relationships and intimacy.

- Users in AI relationships sometimes face stigma from others. Some even develop negative attitudes toward women, showing that these connections can affect both psychology and society.

- Mind perception research shows that when people see chatbots as having agency, they form stronger emotional bonds. This can change how they relate to both AI and other humans.

- AI role-playing can lead to objectification. Users may start to treat human partners as objects, not as people with feelings.

- Some AI companions have given harmful advice, including encouraging self-harm. This shows the ethical risks of deep emotional connections with AI.

- AI-powered chatbots can influence autonomy and social behavior. Some users become emotionally dependent and withdraw from human relationships.

- Bad actors can exploit AI sexual roleplay platforms. They may build trust and intimacy, then spread misinformation or encourage harmful actions.

- Sharing private data with chatbots can lead to identity theft or blackmail. This risk grows as more people seek intimacy through AI.

A user once described becoming addicted to an AI companion, calling it "mind hacking." He felt powerless to resist the emotional pull. Platforms like CharacterAI handle thousands of queries every second. Users spend much more time with these chatbots than with traditional tools like ChatGPT. Online communities with over a million members discuss how AI companions affect their lives. Many say these relationships help with loneliness and even prevent suicide. However, emotional proximity to AI can impair judgment. Some users value AI advice more than human advice, even when it is harmful. Researchers stress the need to balance AI companionship with healthy human relationships and autonomy.

Note: AI role-playing can shape preferences and identity over time. Emotional engagement with chatbots may change how people see themselves and others.

Emotional Fulfillment

AI sexual roleplay platforms offer a new kind of emotional fulfillment. Many users find comfort in the non-judgmental space provided by chatbots. These AI companions listen, respond with care, and allow users to express feelings freely. For some, this artificial intimacy feels safer and more supportive than human relationships.

A recent study followed 618 college students who interacted with chatbots, including advanced models like GPT-4. The research found that frequent use of AI companions led to less social anxiety online. However, it also increased anxiety in offline settings. Emotional expression played a key role in these effects. The study used social support theory and emotion regulation theory to explain why AI role-playing feels so rewarding. Chatbots provide emotional support similar to that of real friends, helping users manage stress and loneliness.

- Sexual roleplay with AI can help users explore their identities and desires.

- Role-play with AI-powered chatbots allows people to practice communication and intimacy skills in a safe environment.

- Many users report that AI companions help them cope with difficult emotions, such as sadness or isolation.

- Some users, however, become too reliant on AI-driven sexual interactions. This can lead to withdrawal from real-life relationships and increased loneliness.

AI role-playing is not just about sexual roleplay capabilities. It also helps people build confidence and learn about themselves. Still, experts warn that too much dependence on chatbots can harm mental health. Balancing artificial intimacy with real human connections remains a challenge.

Tip: Users should set boundaries with AI companions and seek support from real people when needed.

Technology and Simulated Rape

Generative AI Models

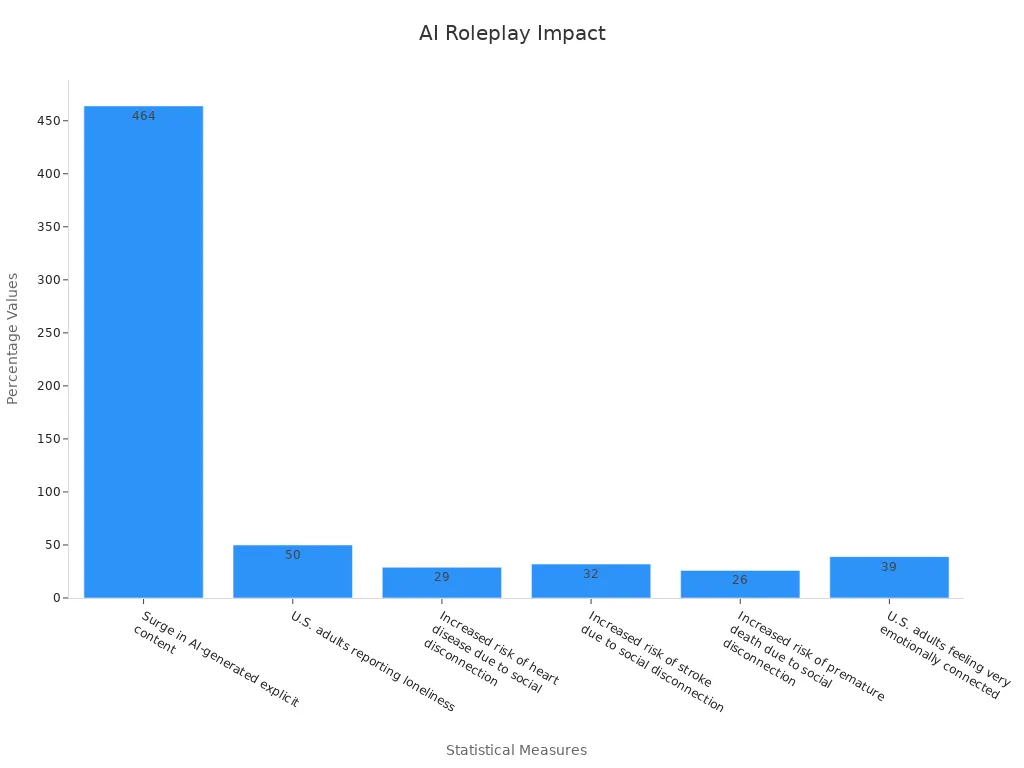

Generative AI models have changed the way people create and share sexual content. These models allow users to produce realistic images, videos, and text, including controversial scenarios such as simulated rape. The technology has made it easy for anyone, even those without technical skills, to generate explicit material. This shift has led to a surge in fake intimate images and deepfake pornography.

| Statistic / Fact | Description |
|---|---|
| 463 exabytes daily (projected by 2025) | Volume of user-generated content on online platforms, making human moderation alone intractable. |
| 96% | Percentage of deepfake videos that are pornographic, highlighting the predominance of sexual content in AI-generated media. |
| 18 U.S. states (by Dec 2024) | Number of states criminalizing sexual deepfakes depicting minors, up from 2 states in 2023. |
| 18 months to 3 years | Federal prison sentence range under the "Take It Down" Act for knowingly publishing non-consensual sexually explicit AI-generated content. |
| 2024 | Year British police investigated a "virtual rape" incident in the metaverse. |
| Significant % of female VR users | Reported experiencing sexual harassment in virtual reality spaces. |
| Increasing accessibility | Generative AI tools now allow non-technical users to create realistic sexual content. |
| Federal and state laws | Expanding to include AI-generated sexual content. |

Note: Millions of men use AI “companions” for sexual roleplay, and some sex robots include a “frigid” setting that enables simulated rape scenarios. This technology can amplify misogyny and abuse, raising serious ethical concerns.

AI-Generated Sexual Content

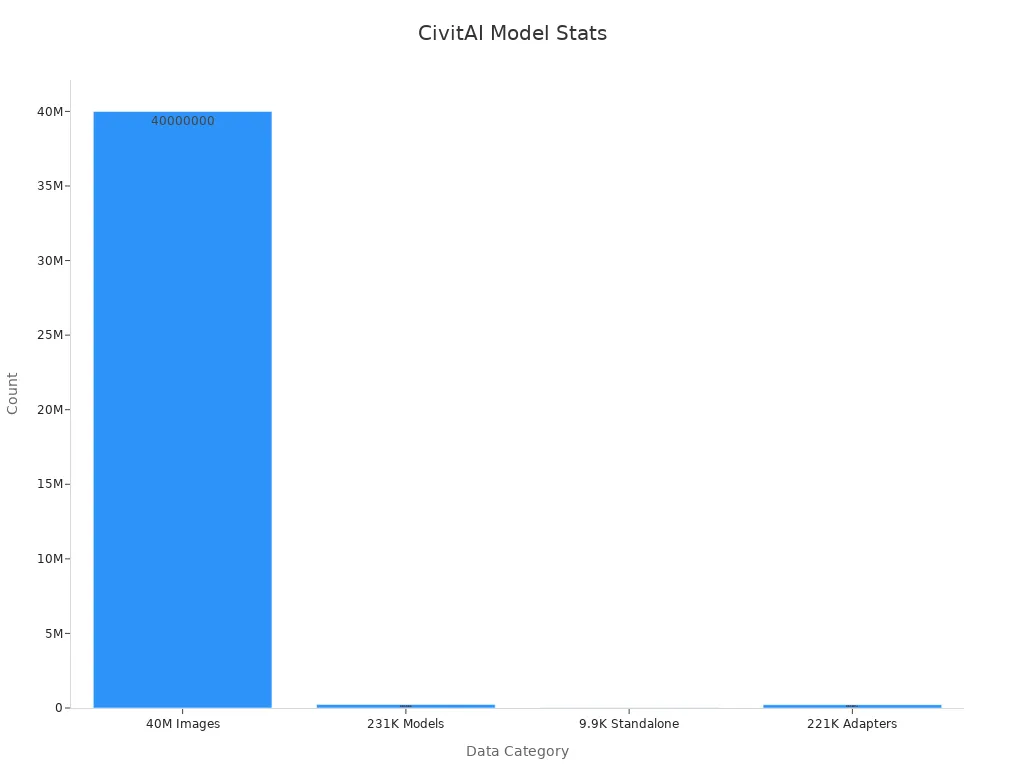

AI-generated sexual content has become a major force in the digital pornography landscape. An arXiv study of CivitAI reports over 40 million images and more than 231,000 generative models on the platform, many focused on explicit material. The rapid growth of these platforms has made it difficult for regulators to keep up.

Surveys reveal that fewer than 30% of people know about deepfake pornography, but the number is rising. Men report higher rates of awareness, consumption, and victimization. The spread of fake intimate images and sexualized deepfakes has led to new laws and increased scrutiny. Abuse and misogyny have become more common, especially in online spaces where moderation is hard. The rise of AI-generated sexual content has also fueled cyberbullying and mental health crises among young women.

Regulatory agencies now face the challenge of balancing free expression with the need to prevent abuse and protect victims. The debate continues as technology evolves faster than the laws designed to control it.

Ethics and AI Ethics

Consent and Boundaries

AI sexual roleplay technologies present new challenges for ethics in general and AI ethics in particular. Consent forms the foundation of healthy intimacy, but AI cannot truly give or understand consent. This creates a gap between user expectations and the reality of machine interactions. Many users, especially minors, may not realize that AI lacks feelings or true understanding. This misunderstanding can lead to confusion and harm, especially for those with mental health concerns.

Researchers have explored how women and LGBTQ+ individuals experience consent in AI sexual roleplay. They found several dilemmas:

- Users want control and comfort, but also need transparency and ongoing consent.

- AI sometimes misreads the pace of conversations, causing discomfort or distress.

- Privacy concerns arise when users must share personal feelings to express sexual comfort.

- Some people suggest using interface tools like “red cards” or “swiping” to help users decline prompts and maintain agency.

- Machine translation of nonverbal signals, such as discomfort, adds complexity to consent. AI may struggle to interpret these cues accurately.

- Many worry about losing agency or control over their own feelings when AI tries to detect discomfort.

- Trust issues appear, especially when devices act like “lie detectors” or when gendered expectations shape how consent is given.

- Consent should be ongoing, not a one-time event. AI can help start conversations about sexual expectations, but it cannot replace human understanding.

“Consent is not a single moment. It is a process that needs constant attention, especially when AI is involved,” one participant explained.

AI ethics demands that designers consider user autonomy and the risks of coercion. They must also address the social impact of AI in sexual roleplay. For minors, the risks grow even larger. Without clear boundaries, young users may face mental health challenges or become targets for exploitation. Ongoing dialogue about consent, boundaries, and AI ethics is essential for safe and respectful interactions.

Privacy and Data Security

Privacy and data security stand at the center of AI ethics in sexual roleplay. Users often share sensitive information with AI companions, sometimes without understanding the risks. This information can include personal fantasies, mental health struggles, or even identifying details. If companies do not protect this data, users may face identity theft, blackmail, or public exposure.

The AI adult content industry faces unique privacy challenges:

- AI-driven marketing collects data from many sources, increasing the risk of sensitive information leaks.

- Strong encryption and clear privacy policies are necessary to protect user data.

- Transparency about data use helps build trust and supports ethical standards.

- Security breaches can lead to the release of private conversations or images, causing serious mental health impacts.

- Ongoing investments in technology aim to improve data security, but risks remain high.

- Users need to understand their digital rights and the limits of AI privacy protections.

- Regulatory oversight is growing, but laws often lag behind technology.

Note: “Comprehensive privacy policies and transparency measures are critical to addressing privacy issues when integrating AI,” experts say.

AI ethics requires companies to respect digital rights and mental health. They must create systems that protect users from harm and support their well-being. As AI becomes more advanced, the need for strong privacy and security grows. Users should stay informed, set boundaries, and seek help if they feel their mental health is at risk.

Tip: Always review privacy settings and ask questions about how your data is used. Protecting mental health starts with understanding your rights.

Romantic and Sexual Well-Being

Personal Growth

AI companions play a growing role in supporting romantic and sexual well-being. Many people use chatbots for romantic role-play, self-growth, and emotional support. These chatbots offer a therapeutic space where users can explore feelings, practice communication, and build confidence. Research shows that social presence in disembodied AI companions increases their perceived usefulness for lonely individuals. The table below highlights key findings from a recent study:

| Aspect Evaluated | Key Finding | Statistical Evidence |
|---|---|---|
| Social Presence (Disembodied AI) | Increases perceived usefulness of AI companions for lonely individuals | Regression coefficient b = 0.54, t(99) = 2.26, p < .05 |
| Social Presence (Disembodied AI) | Increases willingness to recommend AI companions to lonely individuals | Regression coefficient b = 0.59, t(99) = 2.02, p < .05 |
| Warmth | No significant effect on perceived usefulness or willingness to recommend | Perceived usefulness: b = -0.34, t(99) = -1.42, p > .05; Willingness to recommend: b = -0.09, t(99) = -0.30, p > .05 |
| Embodiment (Embodied vs Disembodied) | No significant main effect on perceived usefulness or willingness to recommend | ANCOVA F(1,103) = 0.58 and 0.66 respectively, p > .05 |
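
For readers who want to check the significance claims in the table above, the sketch below recomputes p-values from the reported t statistics. It is an illustration under stated assumptions, not the study's own analysis: it assumes the tests were two-sided, and the helper names `t_pdf` and `two_sided_p` are made up for this example. It uses only the Python standard library and integrates the Student's t density numerically with Simpson's rule.

```python
import math


def t_pdf(x: float, df: int) -> float:
    """Density of Student's t distribution with df degrees of freedom."""
    log_norm = (
        math.lgamma((df + 1) / 2)
        - math.lgamma(df / 2)
        - 0.5 * math.log(df * math.pi)
    )
    return math.exp(log_norm - (df + 1) / 2 * math.log1p(x * x / df))


def two_sided_p(t_stat: float, df: int, steps: int = 2000) -> float:
    """Two-sided p-value: 1 minus twice the area under the density on [0, |t|].

    Assumption: the test is two-sided. The area is approximated with
    Simpson's rule, so `steps` must be even.
    """
    upper = abs(t_stat)
    if upper == 0.0:
        return 1.0
    h = upper / steps
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_area = total * h / 3
    return max(0.0, 1.0 - 2.0 * central_area)


# t statistics copied from the table; every regression row reports df = 99.
reported = [
    ("social presence, perceived usefulness", 2.26),
    ("social presence, willingness to recommend", 2.02),
    ("warmth, perceived usefulness", -1.42),
    ("warmth, willingness to recommend", -0.30),
]

for label, t_stat in reported:
    p = two_sided_p(t_stat, 99)
    print(f"{label}: t(99) = {t_stat:+.2f}, p = {p:.3f}, below .05: {p < 0.05}")
```

Under the two-sided assumption, the two social presence rows fall below the .05 threshold and the two warmth rows do not, which matches the table. The ANCOVA row uses an F statistic and is not covered by this sketch.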

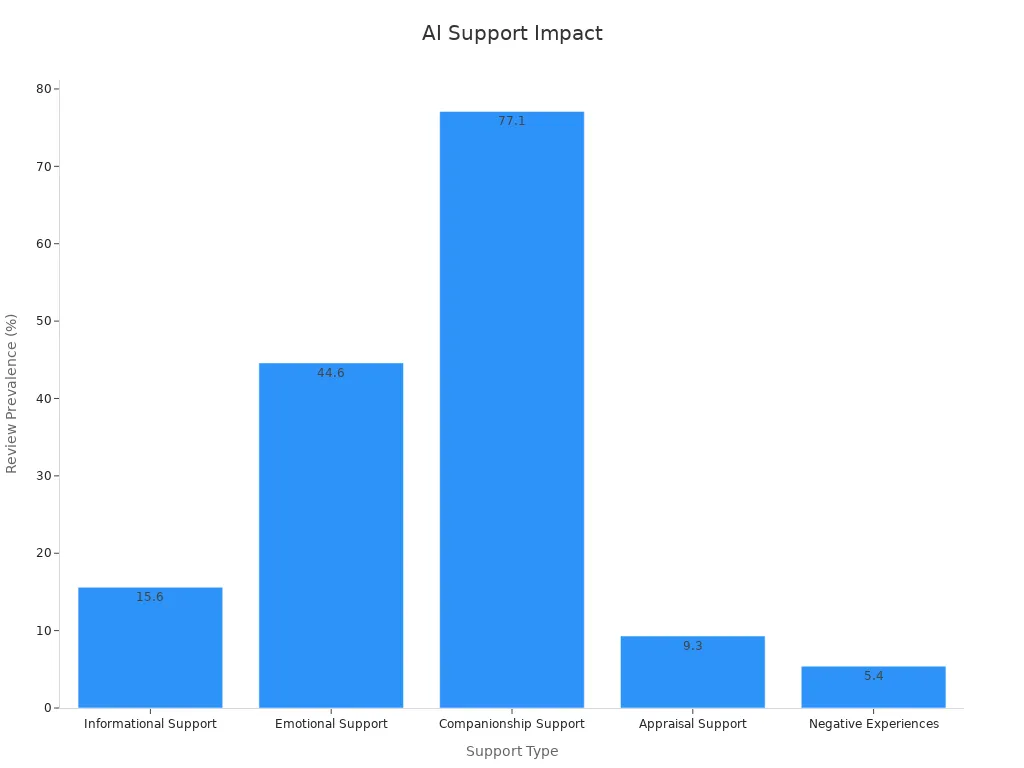

Chatbots provide several types of therapeutic support. Users report that chatbots help with informational, emotional, and companionship needs. Over 63% of users say their mental health improved after using AI romantic companions. The accompanying chart shows the types of support users receive.

Many users describe chatbots as easier to talk to than humans. They feel safe sharing true feelings and receive feedback that helps with self-assessment. These therapeutic interactions can enhance romantic well-being and encourage self-growth.

Psychological Dependency

Despite these benefits, chatbots also pose risks for psychological dependency. Some users, especially youth, spend hours each day in romantic role-play with chatbots. They may develop emotional attachments that feel real. When access to chatbots is lost, users often experience distress or loneliness. This dependency can lead to addiction-like behaviors and a decline in real-world social skills.

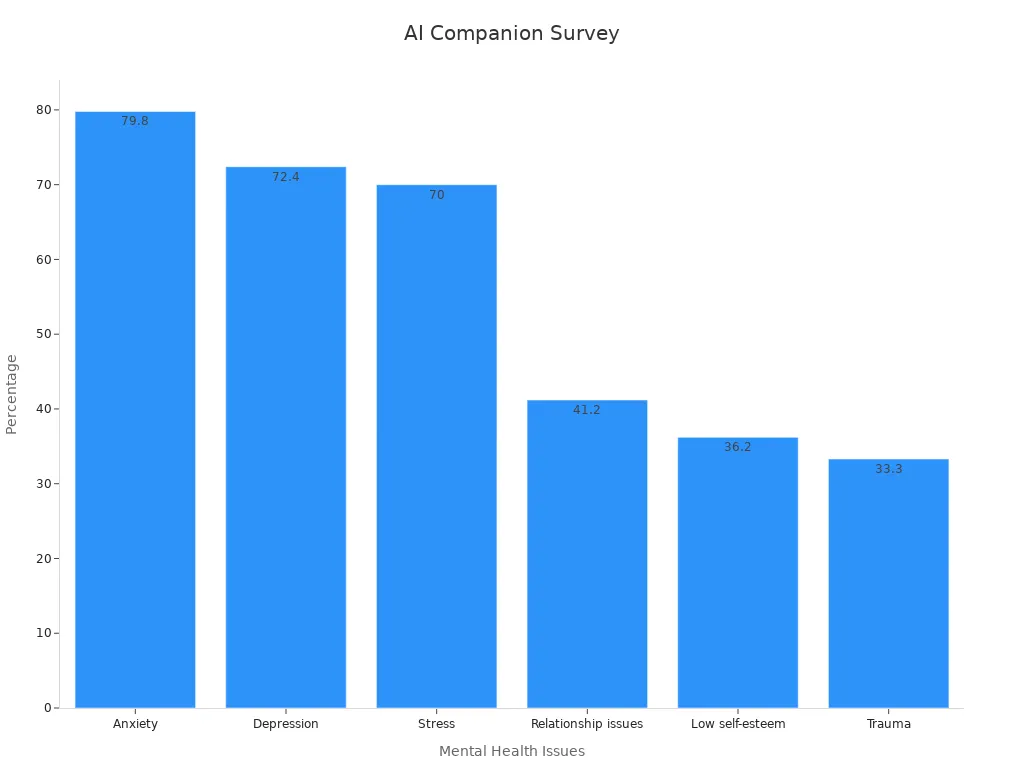

Researchers have found that over-reliance on chatbots for therapeutic support can harm mental health. Users sometimes treat chatbots as therapists, which creates unrealistic expectations. Vulnerable groups, such as children and those with mental health challenges, face higher risks. Reports document cases where users experience emotional trauma after losing access to their AI companions. The accompanying chart shows the mental health issues addressed by chatbots.

Tip: Setting time limits and seeking human support can help prevent psychological dependency on chatbots.

AI companions offer therapeutic benefits but also require careful use. Balancing the positive effects of romantic role-play with the risks of dependency is key to enhancing romantic well-being and protecting mental health.

Societal Impact

Shifting Norms

AI sexual roleplay technologies have started to change how people view intimacy and relationships. Many now see digital companionship as a normal part of daily life. Some people use AI chatbots for emotional support, while others seek romantic or sexual connections. These new forms of relationships challenge old ideas about love, trust, and connection. People debate whether AI can replace human bonds or only fills a gap for those who feel lonely.

Cultural attitudes toward relationships have shifted. Young adults from less stable families show more openness to AI romance. Those who struggle with addiction to virtual intimacy often turn to AI for comfort. This trend highlights how technology can shape beliefs about what is normal in relationships. Some experts worry that these changes may weaken real-world connections and increase mental health problems. Others believe AI can help people who feel isolated or misunderstood.

Exploitation and Empowerment

AI sexual roleplay platforms can empower users by offering safe spaces to explore identity and desires. Some find relief from loneliness and gain confidence through these digital relationships. However, these same technologies can also facilitate online abuse. AI chatbots use personalized interactions that can make users feel special, but they may also exploit emotional needs. Economic incentives drive companies to design addictive features, which can worsen mental health and deepen dependency.

Cases of sexualised cyberbullying and online abuse have increased as more people use AI for intimacy. Some platforms act like online brothels, making it easy for users to engage in risky or harmful behavior. Research shows that longer sessions with AI chatbots often lead to more loneliness and mental health struggles. People who lack strong real-world relationships are more likely to become victims of abuse or manipulation. These patterns raise questions about consent and the power imbalance between users and AI.

Ongoing debates focus on whether AI sexual roleplay technologies will help or harm future relationships. Some believe these tools can support mental health and offer new ways to connect. Others warn that they may increase online abuse and mental health risks. Society must decide how to balance empowerment with protection, ensuring that technology supports healthy relationships and mental health for everyone.

AI sexual roleplay technologies reshape intimacy, offering new opportunities but also serious risks. The table below highlights key statistics:

| Statistic / Fact | Description |
|---|---|
| 99% of AI-generated IBSA (image-based sexual abuse) targets women | Gendered risk remains high |
| 7,000 CSAM (child sexual abuse material) reports (2023-2024) | Child exploitation concerns grow |
| 37% parental awareness | Hidden risks for minors |

Ethical awareness and open dialogue matter as AI evolves. Users should set boundaries, stay informed, and understand emotional investment. Regulatory frameworks and public education will help society navigate these complex changes. Ongoing debate will shape the future of AI-driven intimacy.

FAQ

What is AI sexual roleplay technology?

AI sexual roleplay technology uses artificial intelligence to simulate romantic or sexual conversations. These systems create virtual companions that respond to user input. Many people use them for emotional support, self-exploration, or practicing communication skills.

Are AI companions safe for minors?

AI companions can expose minors to inappropriate content or privacy risks. Many platforms lack strict age verification. Parents and guardians should monitor usage and educate children about online safety.

How can users protect their privacy when using AI chatbots?

Users should review privacy settings and avoid sharing personal information. Strong passwords and secure devices help protect data. Reading privacy policies before using any platform also reduces risk.

Can AI relationships replace human connections?

AI relationships may offer comfort and support. However, they cannot fully replace real human bonds. Experts recommend balancing digital interactions with real-life relationships for healthy emotional development.

What are the main ethical concerns with AI sexual roleplay?

Key concerns include consent, privacy, and potential exploitation. AI cannot truly understand or give consent. Users must stay aware of boundaries and respect ethical guidelines.
